Cornelis Cort, The Last Judgment Triptych, engraving on laid paper, plate: 33.5 × 49.2 cm (13 3/16 × 19 3/8 in.) sheet: 37.5 × 52.1 cm (14 3/4 × 20 1/2 in.), 1570 1560, https://www.nga.gov/collection/art-object-page.52407.html. Courtesy National Gallery of Art, Washington

OpenAI have just released two new models, 'o1-preview' and 'o1-mini' (both of which are in preview, in spite of the name of the latter model), and I think there are a few important points to be made.

OpenAI claim that the new models use 'reasoning', which is anthropomorphising (attributing human intentions or emotions to non-human entities) and just marketing rubbish. From an Ars Technica article[1]:

> Just after the OpenAI o1 announcement, Hugging Face CEO Clement Delangue wrote, "Once again, an AI system is not 'thinking,' it's 'processing,' 'running predictions,'... just like Google or computers do. Giving the false impression that technology systems are human is just cheap snake oil and marketing to fool you into thinking it's more clever than it is."

It's easy to believe there's a deus ex machina in what OpenAI and other companies do. But what is it that they actually do?

As Watumull, Chomsky, and Roberts have concluded[2]:

> However useful these programs may be in some narrow domains (they can be helpful in computer programming, for example, or in suggesting rhymes for light verse), we know from the science of linguistics and the philosophy of knowledge that they differ profoundly from how humans reason and use language. These differences place significant limitations on what these programs can do, encoding them with ineradicable defects.

Chomsky later elaborated on the collaborative article in an interview[3], where he not only explained the differences between science and engineering but mainly spoke about the unquestionable limitations of all AI systems. AI is, at its best, a probability device that delivers possibly erroneous answers while often sounding extremely confident[4].

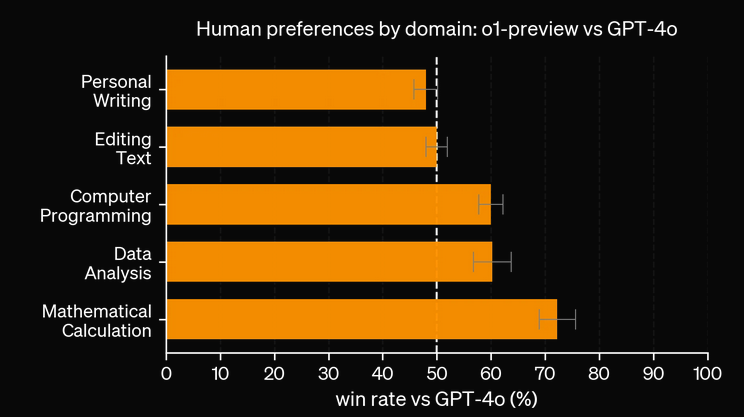

A chart by OpenAI[6].

One thing about this chart: it was made by OpenAI, who have previously published exaggerated and erroneous benchmark results[7]. This is in line with their brand: fiction above fact.

As the chart above shows, the OpenAI model 'o1-preview' is better than some other OpenAI models at some things and not very good at others, such as 'personal writing', whatever that may be, according to OpenAI.

Morgan Stanley recently concluded that datacenters across the globe will produce three times more greenhouse gases than if generative AI hadn't existed[5].

| Model | Accuracy (%) | Tokens | Total Cost ($) | Median Latency (s) | Speed (tokens/sec) |
|---|---|---|---|---|---|
| OpenAI gpt-4o | 52.00 | 7482 | 0.14310 | 1.60 | 48.00 |
| Together meta-llama/Meta-Llama-3.1-405B-Instruct-Turbo | 50.00 | 7767 | 0.07136 | 2.00 | 46.49 |
| OpenAI o1-mini | 50.00 | 29820 | 0.37716 | 4.35 | n/a |
| OpenAI o1-preview | 48.00 | 38440 | 2.40306 | 9.29 | n/a |
| Anthropic claude-3.5-sonnet-20240620 | 46.00 | 6595 | 0.12018 | 2.54 | 48.90 |
| Mistral large-latest | 44.00 | 5097 | 0.06787 | 3.08 | 18.03 |
| Groq llama-3.1-70b-versatile | 40.00 | 5190 | 0.00781 | 0.71 | 81.62 |

LLM benchmark detail by Kagi[8].

The two new OpenAI models require far more compute, and hence power, than most other powerful, commercially available models from other companies. Just take a look at the table above, which is from Kagi's LLM Benchmarks page: the new OpenAI models use around four to five times as many tokens as 'gpt-4o' to produce their answers, and the monetary costs are higher still; you'll pay nearly 17 (!) times more to use 'o1-preview' than 'gpt-4o' (both from the same company), partly to see more finely tuned responses in some fields and partly to see the responses wrapped up in so-called reasoning. All of this at an accuracy rate of 48% for 'o1-preview' and 50% for 'o1-mini', against 52% for 'gpt-4o' (figures that are very generalised, according to the Kagi page).
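The ratios behind that comparison can be checked with a few lines of Python. This is only a minimal sketch: the numbers are copied from the Kagi table above, while the `RESULTS` layout and the `ratio` helper are my own illustrative names, not anything Kagi publishes.

```python
# Figures copied from the Kagi benchmark table (tokens used, total cost in USD).
# The dictionary layout and the helper below are illustrative assumptions.
RESULTS = {
    "gpt-4o": {"tokens": 7482, "cost": 0.14310, "accuracy": 52.0},
    "o1-mini": {"tokens": 29820, "cost": 0.37716, "accuracy": 50.0},
    "o1-preview": {"tokens": 38440, "cost": 2.40306, "accuracy": 48.0},
}


def ratio(model: str, metric: str, baseline: str = "gpt-4o") -> float:
    """Return how many times larger `metric` is for `model` than for `baseline`."""
    return RESULTS[model][metric] / RESULTS[baseline][metric]


if __name__ == "__main__":
    for model in ("o1-mini", "o1-preview"):
        print(
            f"{model}: {ratio(model, 'tokens'):.1f}x tokens, "
            f"{ratio(model, 'cost'):.1f}x cost vs gpt-4o"
        )
```

Run as a script, this reports about 4.0x the tokens and 2.6x the cost for 'o1-mini', and about 5.1x the tokens and 16.8x the cost for 'o1-preview', both measured against 'gpt-4o'.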

Interestingly enough, if you asked me questions and found out that I lied to you half of the time, and I then said, "Wait, I also gave you the 'reasoning' behind my answers", you'd stop listening to me. I keep this thought at the back of my mind when speaking to people who believe AI is a kind of oracle and the evolution of search engines (which is just not true; one should not use AI chatbots as search engines). Deus ex machina, indeed.

AI is surely here to stay, even though recklessness will be held to account; in the USA, for example, OpenAI and Anthropic will share their models with the government[9]. And, as some of the articles above clearly state, AI is very good for use in some very explicit and testable ways, as with programming.

However, as Chomsky argues, language acquisition is something that comes naturally to any four-year-old human being, and will never be understood by AI.

At the end of the day, if 'reasoning' means hurling humanity further toward climate catastrophe, I won't swallow OpenAI's terminology, which is just reckless marketing designed to sell a product that is, at its best, wrong about half of the time.

1. Edwards, Benj. “OpenAI’s New ‘Reasoning’ AI Models Are Here: O1-Preview and O1-Mini.” Ars Technica. Last modified September 12, 2024. Accessed September 13, 2024. https://arstechnica.com/information-technology/2024/09/openais-new-reasoning-ai-models-are-here-o1-preview-and-o1-mini/.
2. Chomsky, Noam, Ian Roberts, and Jeffrey Watumull. “Opinion | Noam Chomsky: The False Promise of ChatGPT.” The New York Times, March 8, 2023, sec. Opinion. Accessed April 3, 2023. https://www.nytimes.com/2023/03/08/opinion/noam-chomsky-chatgpt-ai.html.
3. Chomsky, Noam, and C.J. Polychroniou. “Noam Chomsky Speaks on What ChatGPT Is Really Good For.” Chomsky.Info. Last modified May 3, 2023. Accessed September 13, 2024. https://chomsky.info/20230503-2/.
4. Harrison, Maggie. “ChatGPT Is Just an Automated Mansplaining Machine.” Futurism. Last modified February 8, 2023. Accessed April 3, 2023. https://futurism.com/artificial-intelligence-automated-mansplaining-machine.
5. Robinson, Dan. “Datacenters to Emit 3x More Carbon Dioxide Because of GenAI.” The Register. Last modified September 6, 2024. Accessed September 9, 2024. https://www.theregister.com/2024/09/06/datacenters_set_to_emit_3x/.
6. OpenAI. “Learning to Reason with LLMs,” September 12, 2024. https://openai.com/index/learning-to-reason-with-llms/.
7. Sullivan, Mark. “Did OpenAI’s GPT-4 Really Pass the Bar Exam?” Fast Company, April 2, 2024. https://www.fastcompany.com/91073277/did-openais-gpt-4-really-pass-the-bar-exam.
8. “Kagi LLM Benchmarking Project | Kagi’s Docs.” Last modified September 12, 2024. Accessed September 13, 2024. https://help.kagi.com/kagi/ai/llm-benchmark.html.
9. Feiner, Lauren. “OpenAI and Anthropic Will Share Their Models with the US Government.” The Verge. Last modified August 29, 2024. Accessed September 13, 2024. https://www.theverge.com/2024/8/29/24231395/openai-anthropic-share-models-us-ai-safety-institute.